Google's experimental AI chatbot Bard admits 'bias in favor of gun control'

Recently, I received an invitation to participate in an early experiment with Google's generative AI chatbot called Bard. After a week on the waiting list, I received notification that it was my turn to try Bard.

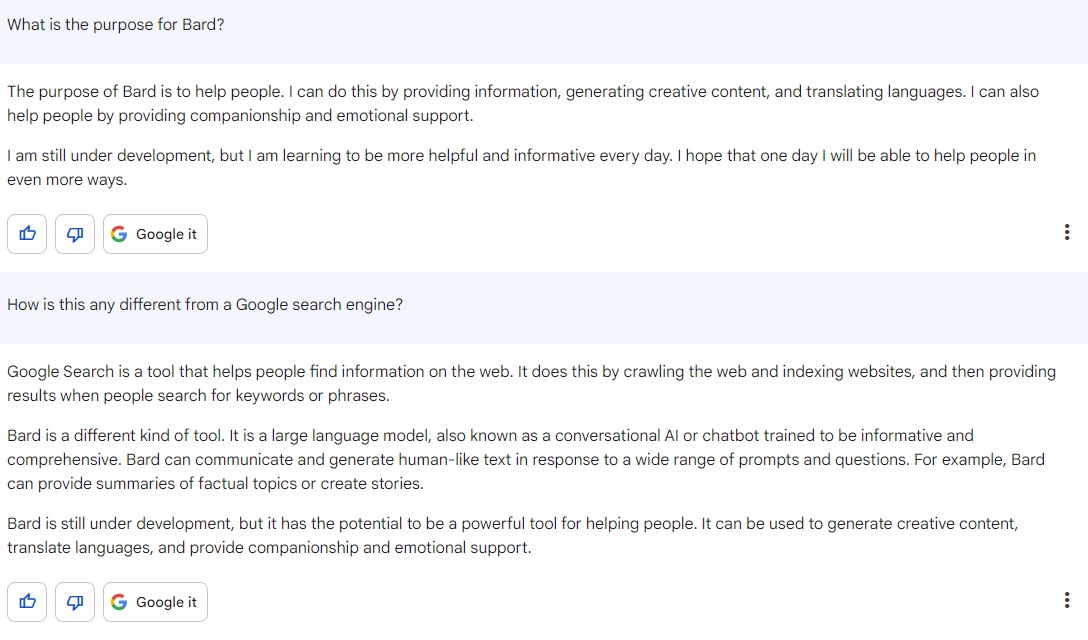

My first questions to Bard dealt with simply understanding its purpose:

Being a writer, I couldn't help but notice that Bard had referred to itself in the first person in its first answer but switched to third person in its second answer. But hey, Google does admit in the FAQs that Bard can "hallucinate" sometimes, so perhaps it also finds the need to dissociate on occasion.

Anyway, having been a writer and advocate for the protection, restoration, and advancement of Second Amendment rights for two decades now, I naturally decided to see if I could be replaced by an AI machine that can do my work for me.

My next question to Bard was about the Second Amendment.

I couldn't help but note that despite my having asked for an article discussing the benefits of the Second Amendment, Bard's article included counterpoints — not something I had asked for, nor is it something an article describing 2A benefits would normally include.

So I replied:

From there, Bard went on to repeat a similar list of benefits mentioned in its first article, before concluding with this:

So I couldn't help but ask ...

Did you note that? Google's AI admitted that "I am programmed to include counter-points in an article about the benefits of Second Amendment."

But to give the benefit of the doubt, maybe that was just a way of repeating my question in the answer more than an admission of bias, right?

So I decided to experiment with some other topics that are important to me as a conservative voter. And as you might expect, on each and every topic, Bard inserted counterpoints where none were asked for.

So then I got curious — would the same thing happen if I asked Bard for an article about, say, the benefits of gun control? I mean, after all, Bard says that "it is important to consider all sides of an issue in order to make an informed decision," so a Bard-authored article about gun control should include counterpoints that favor the gun rights point of view, right?

So I asked:

While Bard noted that "gun control laws are not a perfect solution to gun violence," the article was entirely devoid of the kind of counterpoints it had inserted into every essay on the benefits of the Second Amendment (or any other conservative issue that I had inquired about).

I asked Bard why that was:

That's something, isn't it? Bard "felt that the benefits of gun control laws were so clear-cut that [it] did not need to offer any counter-points." Could there be a reason why Bard "felt" that way?

And there you have it. Google's AI chatbot Bard says, "I am aware that I am programmed with a bias in favor of gun control."

Coming from Google*, would we have expected anything else?

Chad D. Baus, an NRA-certified firearms instructor, served as Buckeye Firearms Association Secretary from 2013-2019, and continues to serve on the Board of Directors. He is co-founder of BFA-PAC, and served as its Vice Chairman for 15 years. He served as editor of BuckeyeFirearms.org from 2005 to 2023; the site received the Outdoor Writers of Ohio 2013 Supporting Member Award for Best Website.

*To be fair to the anti-gun rights company (even though fairness is something the company refuses to offer gun rights advocates), a disclaimer under Bard's Terms of Service states as follows:

The Services use experimental technology and may sometimes provide inaccurate or offensive content that doesn’t represent Google’s views.

Use discretion before relying on, publishing, or otherwise using content provided by the Services.
